Performance

A Storage Spaces solution can scale to over a million IOPS when using SSDs and mirrored volumes, but write performance in Parity and Dual Parity layouts still leaves much to be desired. Without a write-back cache on SSD, such configurations suit only a narrow range of read-dominated workloads.

Fujitsu conducted a comprehensive study of Storage Spaces performance in various configurations. We were able to repeat some of those tests, complementing the IOPS and throughput measurements with average and maximum latencies.

Test conditions:

- Two E5640 processors

- 8 GB RAM

- Supermicro X8DTL-iF motherboard

- Adaptec 6805 controller (tests with hardware RAID), read cache enabled, write cache enabled

- LSI 9211-8i controller (tests with Storage Spaces), firmware P19 IT

- HGST HUA723030ALA640 drives (3 TB, 7200 rpm, SATA3): 12 for the RAID-10 vs Storage Spaces 2-way mirror tests and 13 for the RAID-6 vs Storage Spaces dual parity tests

- 2 Intel 710 100 GB SSDs for the tests with tiering and write-back cache

- Microsoft Windows Server 2012 R2 Standard

- fio 2.1.12 as the load generator

Testing was driven by a script that runs a series of 60-second load rounds with different access patterns and queue depths. A 100 GB test file was used for all measurements except the tiered-storage tests, which used a 32 GB file.

For clarity, we used load templates similar to those in the Fujitsu tests:

| Template | Access | Read | Write | Block size (KB) |
|---|---|---|---|---|
| File copy | random | 50% | 50% | 64 |
| File server | random | 67% | 33% | 64 |
| Database | random | 67% | 33% | 8 |
| Streaming | sequential | 100% | 0% | 64 |
| Restore | sequential | 0% | 100% | 64 |
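The templates above map fairly directly onto fio options. The following is a minimal sketch of how such a test script might build its command lines. It is not the script used for these measurements. The `Template` class, the `fio_args` helper, the test file name and the list of queue depths are all hypothetical; only the template parameters, the 60-second round length and the 100 GB file size come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    name: str
    sequential: bool
    read_pct: int   # share of reads in the mix, in percent
    block_kb: int   # block size in KB


# Values taken from the template table; the identifiers are made up.
TEMPLATES = [
    Template("file_copy", False, 50, 64),
    Template("file_server", False, 67, 64),
    Template("database", False, 67, 8),
    Template("streaming", True, 100, 64),
    Template("restore", True, 0, 64),
]


def fio_args(t, iodepth, filename, size="100g", runtime_s=60):
    """Build a fio command line for one load round (illustrative only)."""
    if t.read_pct == 100:
        rw = "read" if t.sequential else "randread"
    elif t.read_pct == 0:
        rw = "write" if t.sequential else "randwrite"
    else:
        rw = "rw" if t.sequential else "randrw"
    args = [
        "fio",
        f"--name={t.name}-qd{iodepth}",
        f"--filename={filename}",
        f"--size={size}",
        f"--rw={rw}",
        f"--bs={t.block_kb}k",
        f"--iodepth={iodepth}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--direct=1",
        "--ioengine=windowsaio",  # asynchronous I/O engine on Windows
    ]
    if rw in ("rw", "randrw"):
        # The read/write mix only applies to mixed workloads.
        args.append(f"--rwmixread={t.read_pct}")
    return args


if __name__ == "__main__":
    # Queue depths are an assumption; the article does not list them.
    for t in TEMPLATES:
        for qd in (1, 4, 16, 64):
            print(" ".join(fio_args(t, qd, "testfile.dat")))
```

Each printed line could then be run as a separate round, with the IOPS, bandwidth and latency figures collected from fio's output.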

Results

RAID-10 vs Storage Spaces 2-way mirror:

Compared: RAID-10 of 12 disks on the Adaptec 6805 controller; a 2-way mirror of 12 disks (6 columns); and a 2-way mirror of 12 disks with a 2 GB write-back cache on 2 Intel 710 SSDs plus tiered storage with a 40 GB SSD tier (40 GB SSD + 200 GB HDD).

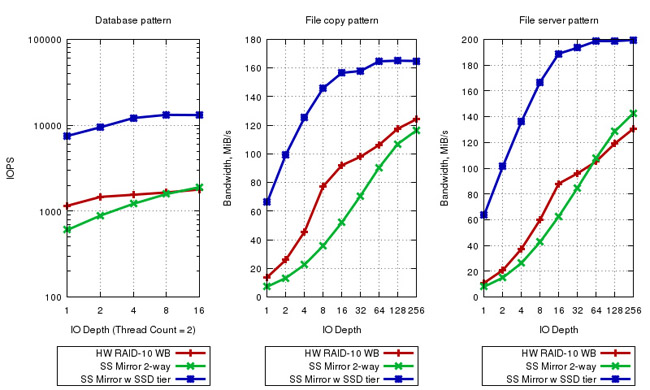

Database (IOPS), File copy, File server (bandwidth) templates. A logarithmic scale is used for the Database template. The graphs show that the 2-way mirror is noticeably slower than traditional hardware RAID-10: by more than a factor of 2 on the Database and File copy templates and by about 1.5 on the File server template. The results converge at queue depths above 16, but this has no practical value because latency grows to unacceptable levels (graphs below). Using SSDs as a write cache and fast tier gives a significant boost: just a pair of Intel 710s, outdated by today's standards, increases small-block random-access performance by an order of magnitude. This combination makes sense not only for OLTP but also for heavily loaded file servers:

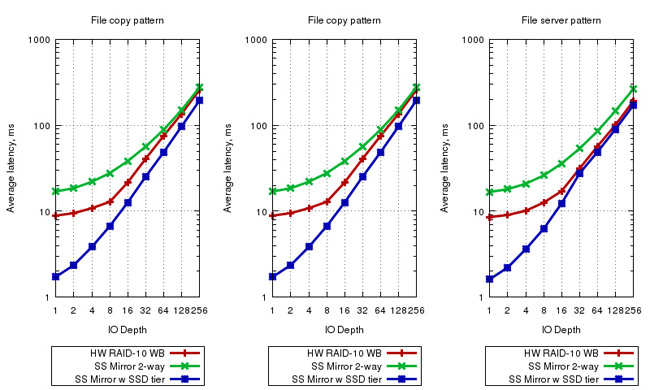
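The reason high queue depths stop being useful follows from Little's law: once an array is saturated, the number of outstanding requests equals IOPS multiplied by average latency, so every further increase in queue depth turns into latency. A minimal sketch, in which the 2000 IOPS ceiling is a made-up figure and not one of our measurements:

```python
def avg_latency_ms(queue_depth, iops):
    """Average latency implied by Little's law: queue_depth = iops * latency."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return queue_depth * 1000.0 / iops


if __name__ == "__main__":
    saturated_iops = 2000  # hypothetical ceiling of a saturated array
    for qd in (1, 4, 16, 64, 256):
        print(f"QD {qd:>3}: {avg_latency_ms(qd, saturated_iops):6.1f} ms")
```

With a fixed IOPS ceiling, going from a queue depth of 16 to 256 multiplies the average latency by 16 without completing any more requests per second.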

Database, File copy, File server templates: average latency. No surprises: Storage Spaces loses, but predictably pulls ahead once SSDs are added:

Database, File copy, File server templates: maximum latency. Despite its higher average latency, Storage Spaces shows more stable values: at low queue depths its maximums are lower on the Database and File copy templates:

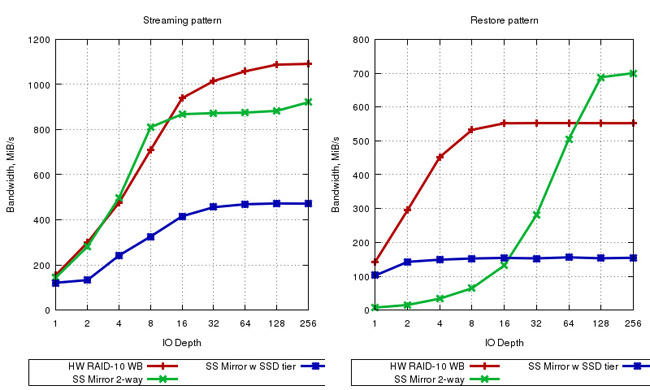

Streaming and Restore templates: bandwidth. Mirrored Storage Spaces keeps up with hardware RAID on sequential reads but falls significantly behind on sequential writes (Restore template). The growth at very high queue depths (> 64) matters only in synthetic tests because of the high latency that comes with it. SSDs are useless here because of the sequential access pattern and the ratio of HDDs to SSDs. Conventional HDDs, especially twelve of them, handle sequential loads very well and are significantly faster than a pair of SSDs:

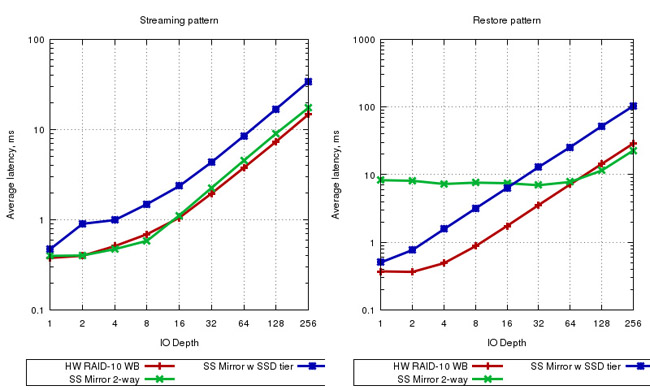

Streaming, Restore templates (average latency):

Streaming, Restore templates: maximum latency. Here the SSDs provide at least some benefit by stabilizing latency:

RAID-6 vs Storage Spaces dual parity

Compared: RAID-6 of 13 disks on the Adaptec 6805 controller and Dual Parity of 13 disks (13 columns). Unfortunately, we did not have enough SSDs of the kind used in the first series of tests to add them to this pool (Dual Parity needs at least 3). A corresponding performance comparison with an SSD tier and write-back cache can be found in the Fujitsu study.

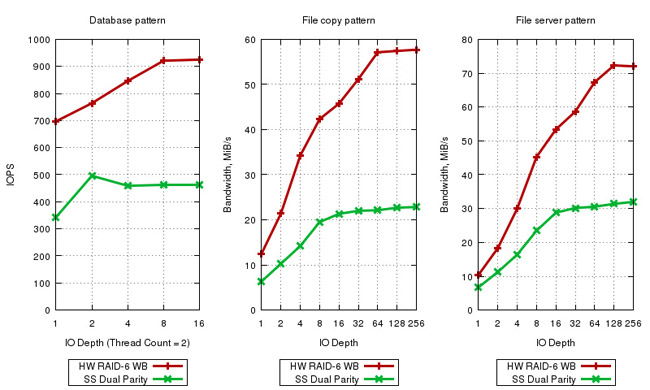

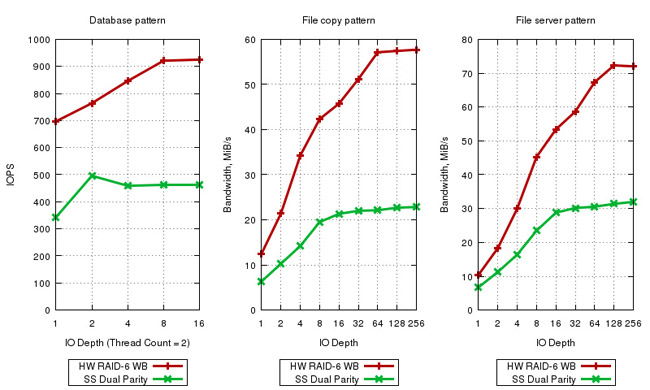

Database (IOPS), File copy, File server (bandwidth) templates. Storage Spaces with Dual Parity is significantly slower than hardware RAID in all random-access scenarios. This is not surprising, considering that the Adaptec 6805 has a 512 MB RAM cache, which can substantially optimize the random access that RAID-6 handles poorly. The Fujitsu tests include the variant "RAID-6 8xHDD vs Dual Parity 8xHDD + 1GB WB cache on 3xSSD": on all 3 templates, Dual Parity with a write-back cache on SSDs is not inferior to hardware RAID, even without tiered storage:

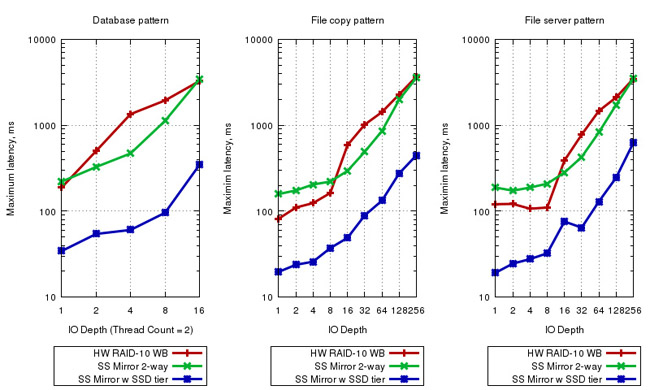

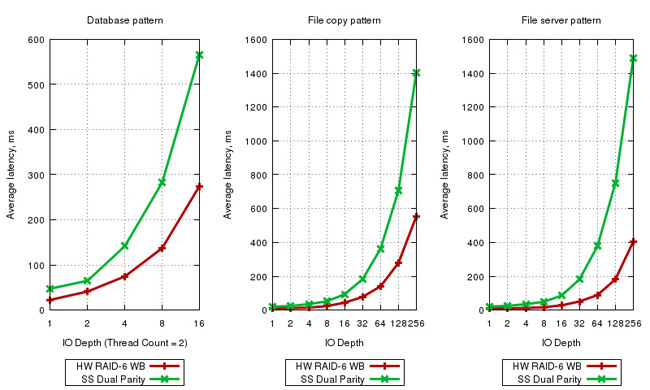

Database, File copy, File Server templates (average latency):

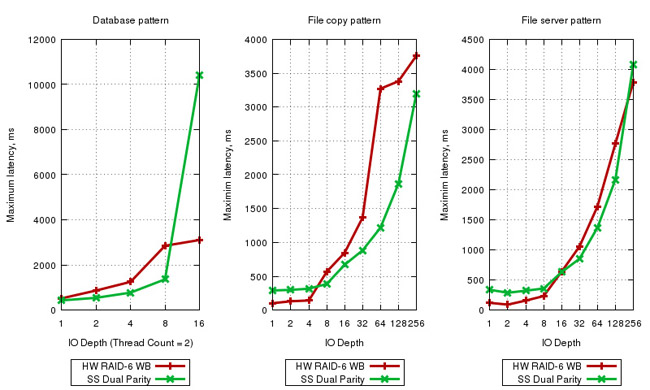

Database, File copy, File server templates: maximum latency. The situation is similar to the previous series of tests with mirrored Storage Spaces. Despite its higher average latency, Storage Spaces shows more stable values: at low queue depths its maximums are lower on the Database and File copy templates:

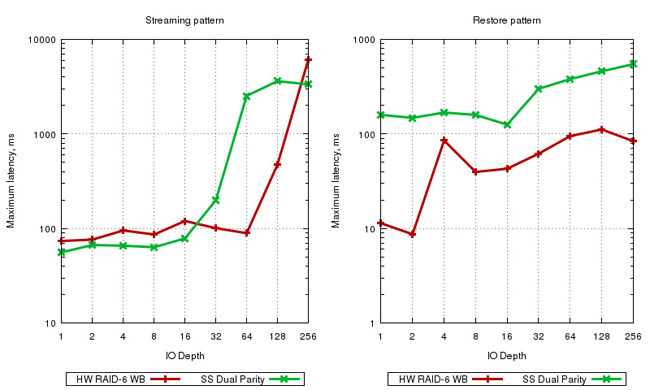

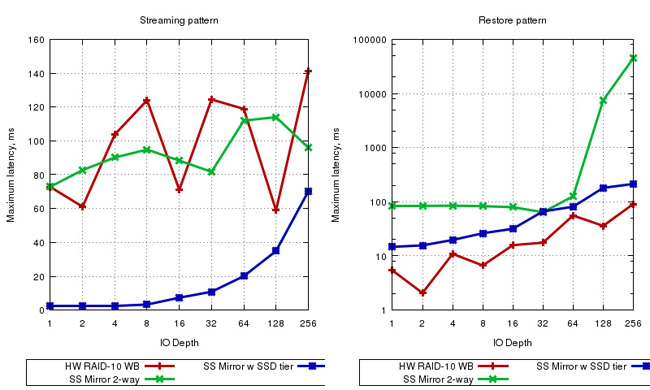

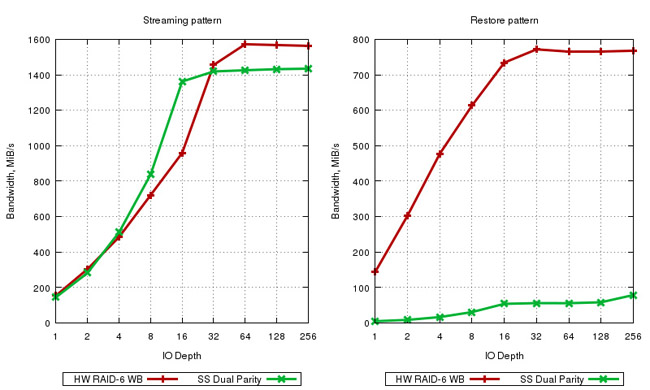

Streaming and Restore templates: bandwidth. Dual Parity handles reads well, but sequential writes show the well-known catastrophic picture, lagging by more than an order of magnitude. Even a large write-back cache on several SSDs (entire disks can be dedicated to it by setting the disk's Usage = Journal) can absorb only relatively short write bursts. Under continuous writing (for example, in a video surveillance system) the cache will fill up sooner or later:

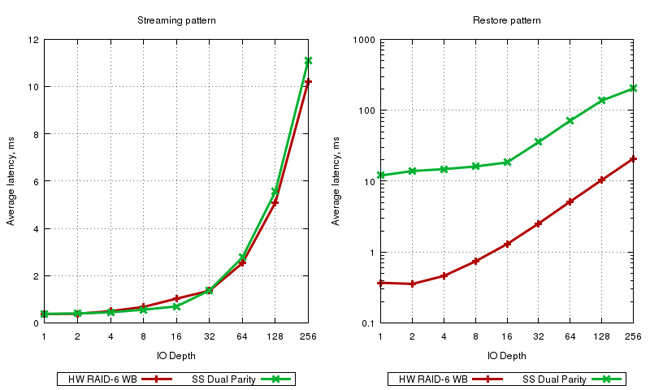
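How soon the write-back cache fills under continuous writing can be estimated with simple arithmetic: the cache grows at the difference between the incoming write rate and the rate at which the parity space destages data to the HDDs. The sketch below assumes constant rates and uses made-up numbers. It is a simplified model and not a description of how Storage Spaces actually manages its cache.

```python
import math


def seconds_until_cache_full(cache_gb, ingest_mb_s, drain_mb_s):
    """Time until a write-back cache fills, assuming constant rates.

    Returns math.inf when the backing store drains at least as fast
    as data arrives, i.e. the cache never fills.
    """
    if ingest_mb_s <= drain_mb_s:
        return math.inf
    return cache_gb * 1024.0 / (ingest_mb_s - drain_mb_s)


if __name__ == "__main__":
    # Hypothetical figures: 100 GB cache, 200 MB/s incoming stream,
    # 50 MB/s sustained sequential write speed of the parity space.
    t = seconds_until_cache_full(100, 200, 50)
    print(f"cache full after about {t / 60:.1f} minutes")
```

Under these assumptions the cache only delays the slowdown. The sustained write rate is still bounded by what the parity space itself can absorb.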

Streaming, Restore templates: average latency. A logarithmic scale is used for the Restore template:

Streaming, Restore templates: maximum latency (logarithmic scale):